¶ RISC vs CISC

Reduced Instruction Set Computers (RISC) vs Complex Instruction Set Computers (CISC)

Computers are everywhere, including some places you wouldn't expect one to be. Servers, desktops, laptops, tablets, and cellphones are some of the most commonly recognized “computers”, but computers don't have to be complex or powerful in the ways we're used to. Other common “computers” are things you use every day and never think about: TVs, wireless routers, dishwashers, ovens, and even vehicles, which use computers called ECUs (Engine Control Units). Computers range from the world-class supercomputers in data centers to the cheapest toy drone that children play with. These differences in purpose result in a wide range of needs, and no single chip type fits every scenario. Nobody wants a phone whose battery lasts one hour, and nobody wants a server that can't handle its intended workload, so we end up with different types of chips. Even though there are more than the two listed here (e.g. SIMD designs are used for GPUs), we tend to classify most CPUs into one of two groups: RISC or CISC. Let's take a look at how they are different.

¶ Complex Instruction Set Computers (CISC)

Pros of CISC:

- 100-200+ possible instructions

- Complexity in hardware results in less complex code and less complexity required from people.

- New Instructions can be easily introduced without removing backward compatibility.

- Instructions are high-level, resulting in less complex compilers

- Instructions allow direct access to memory locations

- Hardware breaks down complex instructions into simpler operations, so programs need less memory.

- Usually upgradable

Cons of CISC:

- More Instructions means more transistors meaning more power consumption, more heat, and more cost.

- Not all software needs complex instructions meaning extra functionality goes to waste

- CISC is more like a truck than a two-seat car. It can carry more and do more, but in a drag race the heavier vehicle is slower. Supporting all those additional instructions tends to make it slower per clock cycle than RISC.

It's not all bad for CISC. Many would be surprised by what counts as a “complex” instruction. Do you use encryption or maybe compression? These are two VERY common uses of complex instructions. It's the difference between a basic calculator (the RISC-like example), where it takes several operations to reach an answer, and a TI-84 graphing calculator (the CISC-like example) with a built-in function that just accepts the variables and spits out the answer in one operation. Realistically, though, the average person isn't using complex instructions on their computer 90% of the time.
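To make the calculator analogy concrete, here is a minimal sketch of a toy machine in Python. Everything in it (the opcode names `LOAD`, `MUL`, `STORE`, `MULMEM`, the registers, and the memory layout) is made up for illustration and does not correspond to any real instruction set. It runs the same multiplication once as a single "complex" memory-to-memory instruction and once as a sequence of simple steps.

```python
# Toy model only: opcode names and memory layout are hypothetical,
# not taken from any real CPU.

def run(program, memory):
    """Execute a list of (opcode, *operands) tuples and return how many
    instructions were executed."""
    regs = {}
    executed = 0
    for op, *args in program:
        if op == "LOAD":      # LOAD reg, addr: copy a memory cell into a register
            reg, addr = args
            regs[reg] = memory[addr]
        elif op == "MUL":     # MUL dst, src: register-to-register multiply
            dst, src = args
            regs[dst] = regs[dst] * regs[src]
        elif op == "STORE":   # STORE addr, reg: copy a register back to memory
            addr, reg = args
            memory[addr] = regs[reg]
        elif op == "MULMEM":  # MULMEM dst_addr, src_addr: multiply two memory cells in one go
            dst, src = args
            memory[dst] = memory[dst] * memory[src]
        else:
            raise ValueError(f"unknown opcode {op!r}")
        executed += 1
    return executed

# CISC-like: one complex instruction does all the work.
cisc_program = [("MULMEM", 0, 1)]

# RISC-like: the same job spelled out as simple steps.
risc_program = [
    ("LOAD", "r1", 0),
    ("LOAD", "r2", 1),
    ("MUL", "r1", "r2"),
    ("STORE", 0, "r1"),
]

cisc_mem = [6, 7]
risc_mem = [6, 7]
cisc_count = run(cisc_program, cisc_mem)
risc_count = run(risc_program, risc_mem)

print(cisc_mem[0], cisc_count)  # 42 1
print(risc_mem[0], risc_count)  # 42 4
```

Both programs reach the same answer. The RISC-like version simply needs more instructions (and more room to store them), while the CISC-like version hides that work inside the hardware.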

¶ Reduced Instruction Set Computers (RISC)

Pros of RISC:

- 30-40 Instructions

- Fewer instructions means more efficient code

- Most instructions complete in a single clock cycle, resulting in faster execution of simpler tasks

- Limited instructions mean fewer ways to do things, making everything more uniform

- Fewer instructions means fewer transistors, meaning lower cost, smaller size, less heat, and less power consumption

Cons of RISC:

- Usually soldered onboard, no upgradability.

- Performance and capabilities of all programs depend HEAVILY on compilers and programmers. In other words, developers must exert more effort and have greater knowledge.

- Compilers have to break down high-level instructions since the hardware cannot, meaning the process needs more RAM and more clock cycles.

- Translating CISC to RISC increases code size. Those complex instructions aren't handled in hardware anymore, so you need to break them up and store the pieces in RAM.

- RISC processors require large memory caches to feed the instructions and keep them primed.

- Capabilities, features, and advantages depend on specific architecture meaning less backward compatibility.

It's not all bad for RISC either. It's nice to have a computer in your pocket that has all-day battery life and isn't burning your leg. We also know you don't use a tablet or a phone for heavy-duty computing, so the additional functionality is rarely needed in these mobile devices. Most people wouldn't realize they are missing any features or instructions, since they would probably never use them for their everyday activities of browsing Facebook, watching videos, or taking photos.

¶ Consumer vs Workstation/Server Grade CPUs

What is the difference between a Xeon and a Core i7? It's crazy how often I ask this question and get a bad answer. In many interviews I've heard people try to confidently fake their way into a job. When I ask someone how they would build their own server if money were no object, it's extremely common to hear they'd choose a Core i7 and add a gaming GPU. When I ask why they wouldn't choose a Xeon for their server, I get blank stares, and a common reply is “I don't like AMD stuff”. Xeon is an Intel product, so this immediately shows the person has no clue what they're talking about. I'm not against adding GPUs to servers, since they can easily serve a purpose there, but I rarely get a good answer as to why they want one in a SERVER. Some answers have been great, such as folding@home, remotely building 3D models, or some kind of compiler function that can use GPUs. Others have been terrible, such as claiming that servers have more cores and are therefore way better for gaming, which is absolutely false. Understanding the differences between these grades is crucial. The same applies to the differences between a basic AMD Ryzen (Consumer) and a Threadripper/Epyc (Workstation/Server) CPU.

¶ Consumer Grade

- Do you like the ability to overclock? Sure, but not required.

- Do you want lower power usage? Preferably yes.

- Do you like gaming or high single thread performance? Most likely yes. If you're not gaming, single thread performance still gives you that snappy responsiveness.

- Do you need 32 GB of RAM or LESS? YES.

- Do you use the machine 24/7 with mission critical workloads? Not really.

- If the machine has an error or shuts down in the middle of use, will it cost you thousands or millions? No.

- Do you want to pay a lot (2x or more) for a CPU guaranteed to run 24/7 with non-stop workloads? No.

- Do you want something that gets the job done without breaking the bank? Yes.

Then you want a Consumer Grade CPU or computer. A perfect example: I could buy a Core i7-10700 for $275, or I could buy a much slower Xeon Silver for over $500. It doesn't stop there. If you buy a more expensive CPU, you're going to pay for a more expensive motherboard to go with it, as well as more expensive RAM, and the trend of costing 2x or more continues. Consumer Grade equipment is really meant to serve a limited number of users (usually 1).

¶ Workstation and Server Grade

- Do you prefer stability and reliability over speed? Yes.

- Do you need ECC Memory (Error-Correcting Code memory)? Yes.

- Do you have multiple users using the system at the same time? Yes.

- Are you hosting mission critical jobs, databases, or data that could cost you money if corrupted or lost? Yes.

- Are you performing huge workloads that could see time reduction with better multi-threading performance? Yes.

- Do you need the machine to remain turned on 24/7? Yes.

- Could you possibly need or want dual CPUs? Yes.

- Do you need 32 GB of RAM or MORE? Most likely, yes.

- Do you need/want Quad or Octo-Channel Memory? For heavy workloads it can help to have multiple "RAM Highways".

- Do you need more than 1 or 2 GPUs? It's possible, yes.

Then you want Workstation or Server Grade components. Workstation components could use Consumer Grade amounts of RAM, but it all depends on the use case. If you need multiple GPUs for 3D model rendering (Pixar studios anyone? Game devs? etc.), your CPU must offer enough PCIe lanes to support that many GPUs. Workstation and Server Grade CPUs offer more PCIe lanes than Consumer Grade. Depending on how large your jobs are, you may require more than 32 GB of RAM. These jobs are heavily threaded, so while single-thread performance is helpful, it's not king. More cores and threads means more work done in less time. A perfect example: I need to move 50 people. I could take a sports car and move people 2 at a time, each trip a little faster, or I could take a Greyhound bus and move all 50 people at once, just slower.
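The sports car versus bus trade-off is really latency versus throughput, and a little arithmetic shows it. The sketch below is only an illustration: the trip times are invented numbers, and it assumes each vehicle has to drive back empty between loads.

```python
import math

def total_minutes(people, seats, one_way_minutes):
    """Time to move everyone, assuming an empty return trip between loads."""
    trips = math.ceil(people / seats)
    loaded = trips * one_way_minutes
    returns = (trips - 1) * one_way_minutes  # no need to come back after the last load
    return loaded + returns

# Hypothetical numbers: the car is faster per trip, the bus carries everyone at once.
car = total_minutes(people=50, seats=2, one_way_minutes=20)
bus = total_minutes(people=50, seats=50, one_way_minutes=30)

print(car)  # 980
print(bus)  # 30
```

The car wins any single trip, but the bus finishes the whole job far sooner. Heavily threaded workloads behave like the 50 passengers: many slower cores can beat a few fast ones.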

Workstation and Server Grade doesn't always mean more CPUs, cores, threads, or GPUs. Sometimes it just means more reliability, 24/7 uptime, and error correction.

¶ The Practice of Binning CPUs

Many CPUs are the exact same part, but separated based on quality. When making CPUs, the circuits are so small that even a tiny defect in the silicon can pose a problem. Some cores may not function or may constantly produce errors. Some features may not work and may need to be disabled. This is how you could end up with an Intel i3 or i5 that is in reality a cut-down i7 that failed quality control tests and had to have the faulty parts disabled. It's still usable, but it's not as powerful as the intended design. This cuts losses and still provides a viable product, especially to those who do not wish to pay top dollar for something they don't need. For most people surfing Facebook and Pinterest or playing solitaire, an i3 is plenty. It's a win-win. The consumer gets cheaper parts that fit a need, and the manufacturer can still make a profit and cut losses on parts that don't meet Quality Assurance requirements. It also reduces the number of manufacturing facilities needed to make each version of a chip, as they can all come off the exact same manufacturing line. That means less investment in machines and facilities and more ability to shift production to match demand. If demand for i7s is lower and demand for i5s is higher, it's a simple solution to just keep printing chips and use firmware to lock down the extra cores or laser-cut pipelines to reduce performance.
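The binning idea can be sketched in a few lines of Python. This is a simplified illustration, not how any manufacturer actually sorts chips: the tier names and core-count thresholds are hypothetical, and real binning also considers clock speeds, power draw, cache, and other features.

```python
# Hypothetical product tiers for a die designed with 8 cores.
# Each entry is (label, minimum number of working cores), best tier first.
TIERS = [
    ("8-core part", 8),
    ("6-core part", 6),
    ("4-core part", 4),
]

def bin_die(core_ok):
    """Pick a product tier from per-core test results.

    core_ok is a list of booleans, one per physical core,
    True if that core passed testing.
    """
    good = sum(core_ok)
    for label, cores_needed in TIERS:
        if good >= cores_needed:
            return label
    return "scrap"

print(bin_die([True] * 8))                # 8-core part
print(bin_die([True] * 6 + [False] * 2))  # 6-core part
print(bin_die([True] * 5 + [False] * 3))  # 4-core part
print(bin_die([True] * 3 + [False] * 5))  # scrap
```

Note the third case: a die with five good cores is sold as a 4-core part, with one healthy core disabled along with the faulty ones.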

Full Disclosure: I don't own stock in either of the companies listed, and I do not stand to gain anything by supporting one or the other.

¶ Current Comparison

Firstly I want to say, go with whoever your research tells you to go with. You don't have to go by my page alone. There are always going to be things that Intel is better at as well as things AMD is better at. Decide based on your use case. AMD tends to have longer upgrade paths, keeping a CPU socket around longer and allowing for easier upgrades. AMD also allows Unbuffered ECC memory to be used with its chips so long as the motherboard manufacturer decided to enable it. AMD chips can seem to blur the lines between consumer and workstation grade. By allowing the use of Unbuffered ECC memory and offering consumer/workstation grade chips of up to 64 cores and 128 threads, AMD has remained fairly flexible about its chips and sockets and given the customer more options. Due to their larger core counts, high demand, and an inability to keep stock, AMD has become the more expensive option as of late if you only look at CPUs. Be aware that Intel motherboards are notoriously more expensive, but there are always budget options for both brands. Intel CPUs being cheaper could save you money if all you want is a gaming machine and you aren't really super security minded (read the history). Let's face reality, hackers aren't usually gunning for smaller individuals, but for larger corporate targets. Competition is good here, as it's reducing prices and making CPUs more affordable.

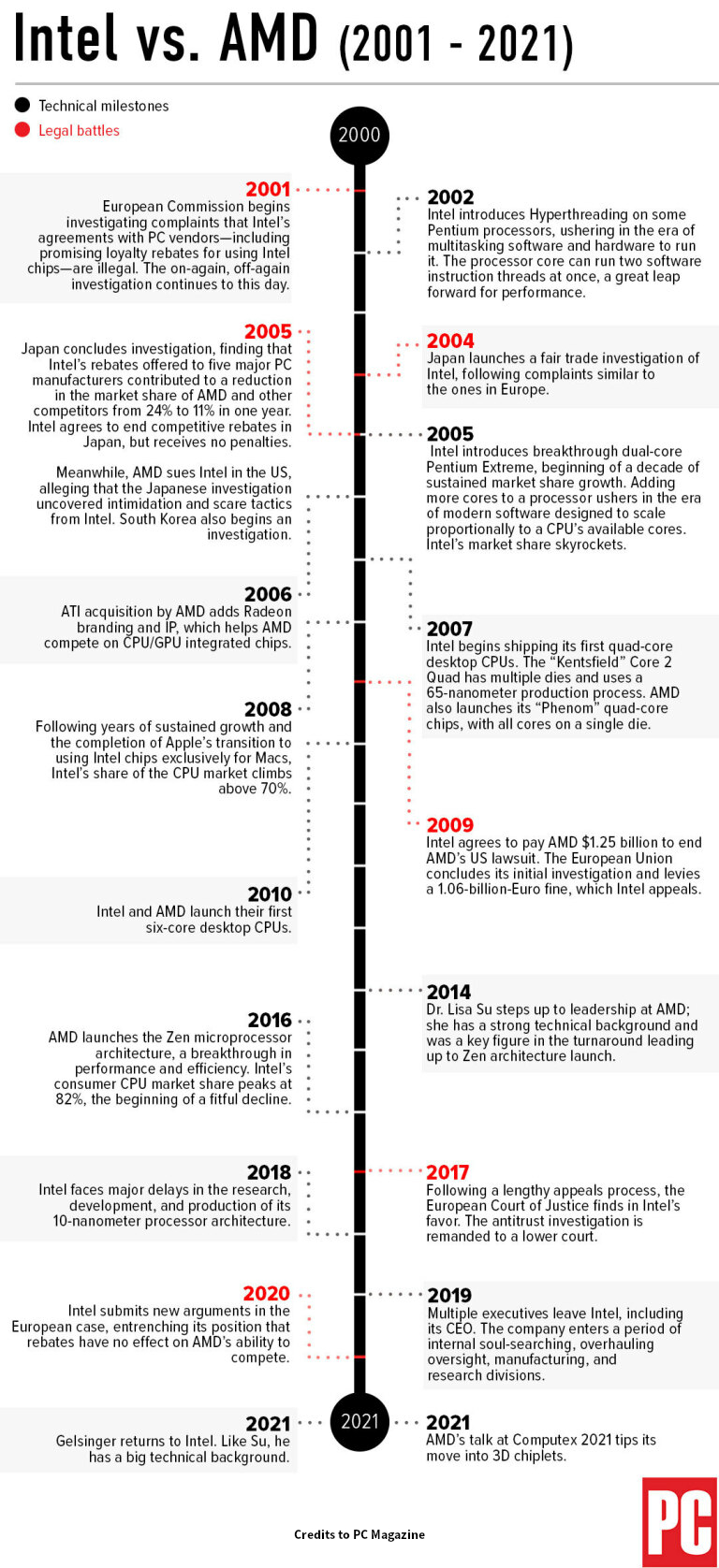

¶ History

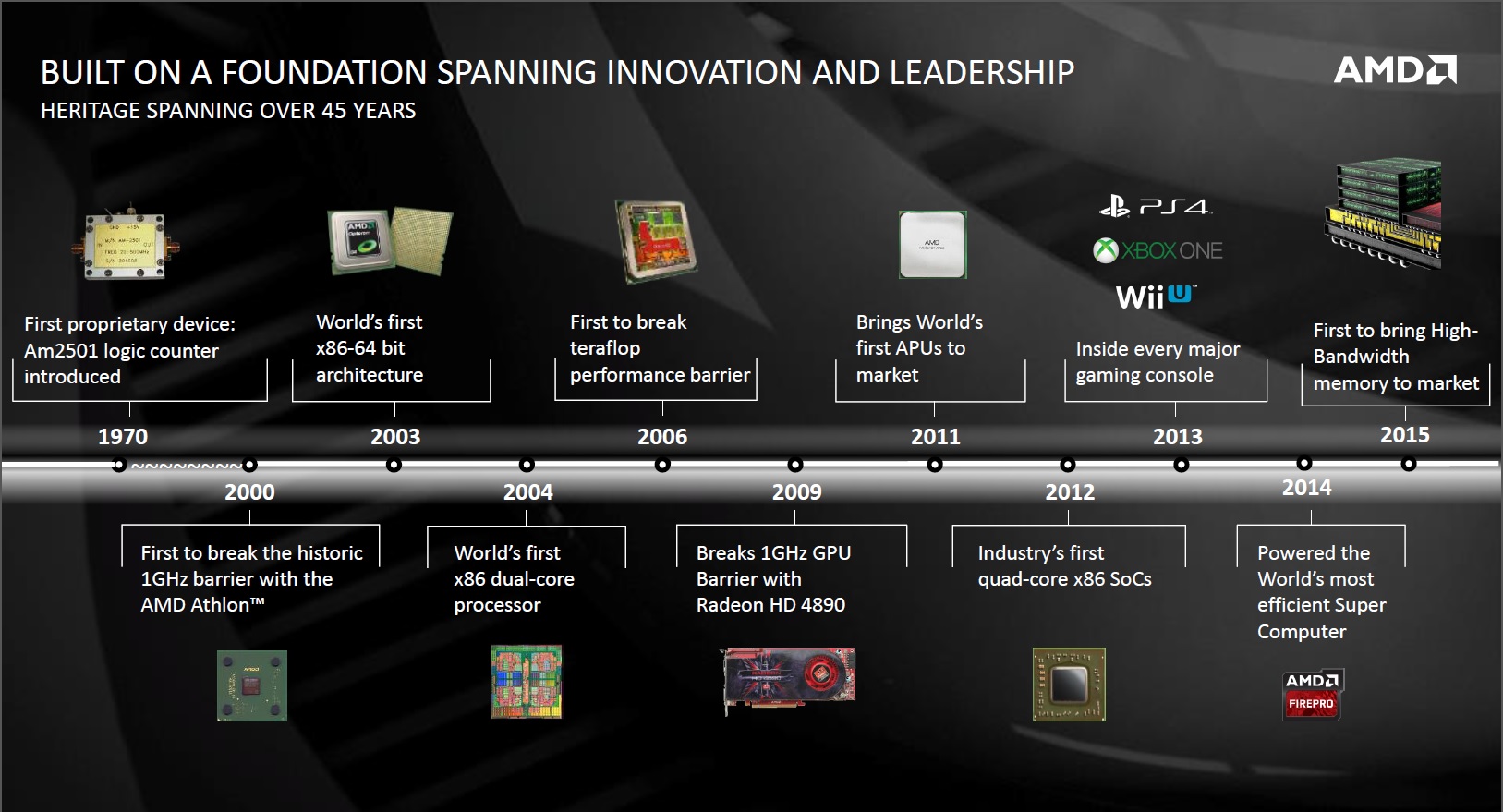

¶ Introduction

For the longest time the question has been: do I go with Intel or AMD? Intel is the original x86 CISC processor vendor. Many old-school users know Intel was the first, or the original, and choose Intel based on that alone. Intel and AMD have duked it out in performance battles for years. Early on, during the Pentium days, it was a battle of trading blows. AMD was very innovative, competitive, and usually cheaper. But eventually AMD started to lose out. In 2011 AMD released its AMD FX line of processors to compete against Intel's new Core line. Both were each company's top-tier processors, but AMD's had a flawed design in which multiple cores shared FPUs (Floating Point Units). This greatly reduced performance, as each core was not truly independent of the others. AMD had other innovative wins, but the performance crown was no longer within reach at this time. Intel also had the benefit of Hyper-Threading (a.k.a. SMT, Simultaneous Multithreading). Until then AMD had consistently offered better multi-threaded performance and, if overclocked, the possibility of comparable single-thread performance for less money. But Intel's new Core series processors were much better in single-threaded performance than AMD's FX line, and AMD's multi-threaded performance didn't win by much even though Intel had half the number of cores (best AMD = 8 cores/8 threads, best Intel = 4 cores/8 threads). For several years AMD seemed to lose every performance battle and was considered the budget option.

¶ AMD's Uphill Battle, and Intel's Anti-Trust behaviors

To make matters worse, Intel was no stranger to unlawful exclusionary practices, making deals to block competitors and buying up the competition. Intel and AMD had a lawsuit in 1991 (partially dismissed due to the statute of limitations) which was settled out of court in 1995. Intel was attempting to become a monopoly, and in 1998 the FTC halted its acquisitions of other companies to prevent this. Around that time, the FTC filed another antitrust complaint against Intel for threatening to stop selling microprocessors to companies as a method of leverage, pushing them to drop legal actions against Intel over its abuse of the microprocessor patents it held. In 1999 a settlement was reached in the 1998 antitrust case, but the agreement was not an “admission of guilt”. In 2004 Japan claimed Intel had violated antitrust laws again. Intel disputed the findings, but agreed to change its business practices to appease regulators. Later the same year AMD filed another antitrust lawsuit for anti-competitive behavior, suing Intel for $50M in damages. In 2006 AMD filed another antitrust complaint against Intel in Germany over a deal that blocked a retailer from selling any computers based on AMD processors. During this time AMD had just come to an agreement to purchase ATI Graphics. This move was a gamble and nearly bankrupted AMD, since all the anti-competitive behavior was blocking the sale of its products. In 2007 Intel was charged by the European Commission with paying or offering rebates to manufacturers to delay or cancel AMD products, as well as blocking the sale of AMD products. In 2008 Intel was again slammed with antitrust charges by the European Commission for again aiming to exclude competitors (AMD) from the market. In 2009 the European Commission ruled against Intel on those complaints. The US wasn't far behind in reaching the same conclusion later that year. Intel and AMD agreed to a settlement that resulted in AMD being paid $1.25B and AMD acquiring a cross-licensing deal.

By this time, AMD had been missing out on a lot of money for R&D. They were struggling to stay competitive and were always a year behind. $1.25B was nowhere near the amount of money lost during that time, and AMD already had a $2B loan it needed to make payments on from buying ATI. ATI would end up being an extremely successful gamble for AMD, but the processor division was hurting. The AMD FX line released in 2011 proved lacking. They had to sell the processors cheap to move product. They were effectively a good deal money-wise, but they weren't going to win any performance awards. AMD had also made literal gambling on its processors worthwhile. Since the processors were not locked and CPUs were mostly binned from the same parts, you could buy a 6-core processor and unlock it to 8 cores from within the BIOS if the other 2 cores were healthy. This allowed AMD to turn a profit, but the margins weren't great. AMD struggled like this for 6+ years. In 2017 AMD released a product which breathed new life into the company, and the Zen Architecture was born.

¶ AMD's Recovery and Intel's fall from Grace

AMD's new Zen Architecture was amazing. It was a completely new micro-architecture that included SMT (Simultaneous Multi-Threading) to compete against Intel's HT (Hyper-Threading). Intel had been the reigning performance champion, uncontested, for several years. Without competition, there's usually no real reason to struggle or improve your product. While Intel did improve the product over the years, the improvements from year to year weren't exactly amazing. In many cases, new generation CPUs didn't offer much to entice people to spend the extra money on a new machine. Differences between Broadwell and Skylake chips, for example, didn't seem big enough to matter. Generational differences became less perceptible during this time. AMD had just released a new series of CPUs where you got double the CPU for ⅓ less money compared to Intel. Intel had just released Kaby Lake 4-core, 8-thread CPUs. It wasn't long after that AMD released 8-core, 16-thread CPUs for less money, and the single-threaded performance difference wasn't far off. Intel was still winning on single-threaded performance, but not for long.

Less than a year after Zen 1 (Ryzen 1XXX, 14nm) was released, an Intel CPU security flaw was discovered. The flaw, known as Meltdown, was found to allow programs to access other places in memory. While that doesn't sound scary at first, the actual result was a nightmare. Passwords, credit card numbers, and any of your other secrets could be picked out of memory and passed on to an attacker. Nothing was isolated and nothing was safe. This flaw was a result of Intel's chip designers chasing performance and neglecting security. It was a hardware design issue that couldn't be easily fixed. Software patching to avoid the flaw typically resulted in a 5-30% performance loss, with an average loss of over 12%. After that AMD became a little more competitive, as Intel's single-threaded performance was only about 8-10% ahead of AMD's. That's a lot to gamers, but not much to the rest of the world. It didn't stop there, as Spectre was also discovered, affecting ALL CPUs that rely on speculative execution. ARM and AMD lost as little as 1-3% performance from the patching, but Intel was not so lucky, losing ~15% performance from a required patch that wasn't considered foolproof. The guaranteed fix was to disable Intel's Hyper-Threading technology, resulting in a 20+% loss of performance. AMD then released Zen+ (2XXX series, 12nm), which improved upon the original Zen with a boost of ~10% performance. The only winning feature Intel had left was gaming, with the ability to output more frames per second. In 2018, MDS/Zombieload were discovered, once again affecting Intel disproportionately compared with AMD. Then RIDL and Fallout (another MDS attack) were discovered as a hardware design flaw that affected Intel chips, allowing cross-application or cross-VM data theft. Patching for AMD (which wasn't affected by all variants) resulted in ~3% performance loss, but Intel once again lost another ~16% performance to patch all the flaws.
Then SWAPGS was discovered, affecting only newer Intel chips on Windows machines, and LVI was discovered to attack and leak data from Intel's SGX enclaves. LVI required a redesign of hardware to fix! LVI wasn't the only new flaw to attack Intel SGX, as Foreshadow (L1TF) and Snoop, which accessed VMs, hypervisors, etc., were discovered shortly after as well. Spectre wasn't done either, as Spectre v2 was discovered to affect all chips with SMT/HT. Performance loss once again hit Intel disproportionately. Intel performance was hurt so badly that in some situations the patch penalty was a 50% performance loss. The patch ended up disabled by default because the performance loss was so bad, and it was cheaper and more reliable to just disable Hyper-Threading on Intel CPUs, which still resulted in a massive performance loss. This wasn't the end of Intel's disastrous situation. Many more flaws were found and patched over time. You can read more on them here.

While Intel was performing damage control, AMD released Zen 2 (Ryzen 3XXX, 7nm) in 2019. It was at this point that AMD CPUs had better performance than Intel CPUs a majority of the time. The security patching really hurt Intel's performance, to the point that they just weren't able to compete anymore on price/performance. They had to lower their prices and change the marketing campaign to “real world performance” benchmarks because they lost nearly all synthetic benchmarks unless they used processors from different weight classes to stack the odds in their favor (comparing Ryzen 5 to Core i7 instead of Ryzen 7 to Core i7). You could find benchmarks where security mitigations are disabled, making Intel look like the performance KING, but many of those get called out when people do their research. Intel was losing in power consumption as well as performance. For the first time ever, AMD was able to take the performance-per-watt crown, and Ryzen was becoming an option for both standard and gaming laptops. AMD stock has recovered and grown at a rate previously considered unbelievable. The company has been able to afford to put more into R&D and into programmers who supply better drivers, which was a known issue for its GPU market. It seems everything is on the up and up. You can learn about CPU performance benchmarks at Phoronix.com.

¶ CPUs and SOCs

¶ CPUs and the Advance Toward SOCs

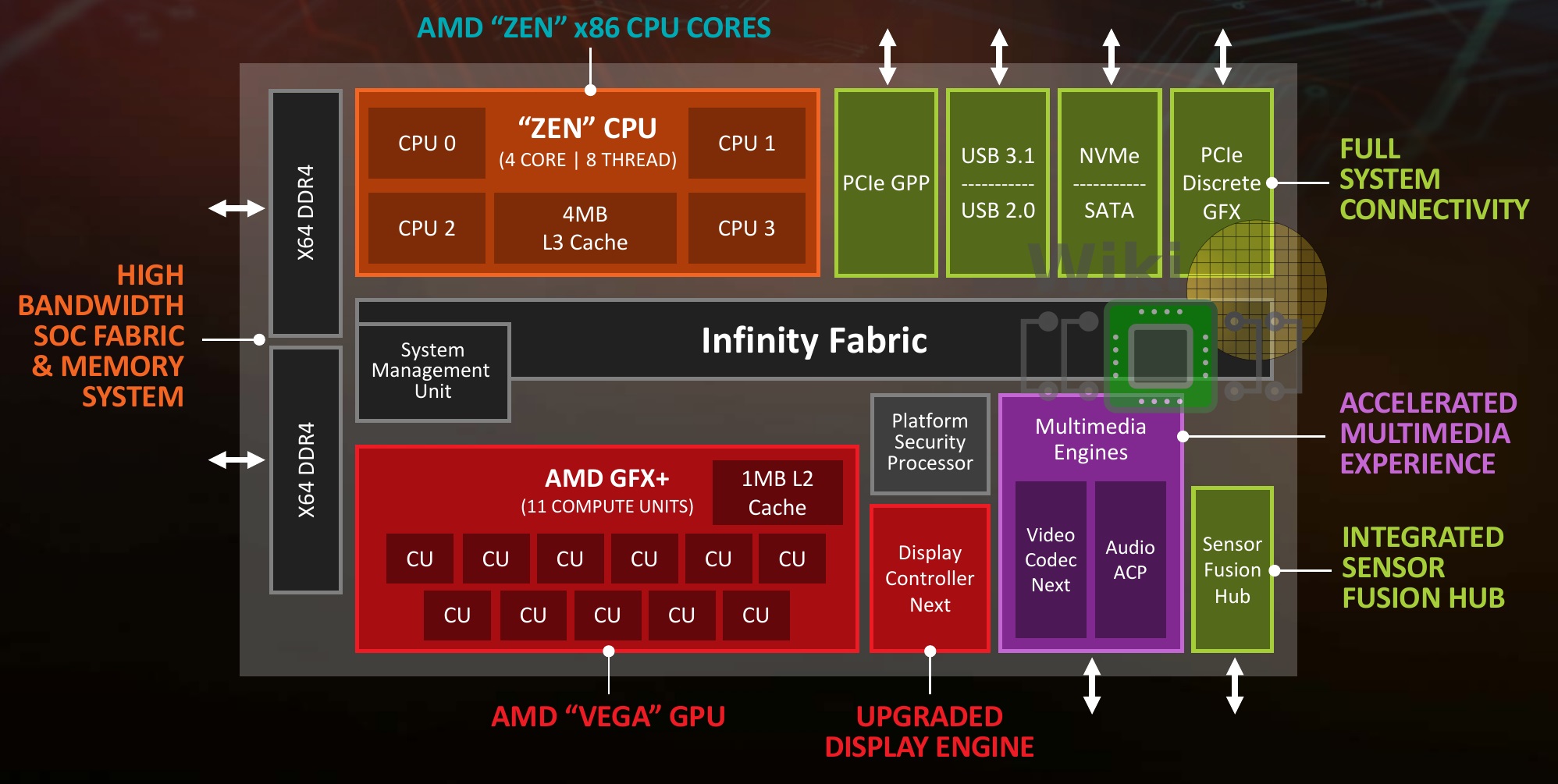

CPUs originally were limited in function, supporting only the basic compute function we know them for today. As time has moved forward, more and more features and hardware have moved into the CPU. We tend to refer to chips that handle multiple jobs as “SOCs”, or Systems on a Chip. One example of this is graphics. There are several CPUs out there, from both Intel and AMD, that offer an iGPU (integrated GPU). While they don't compare in performance to dedicated GPUs (dGPUs), not everyone needs the horsepower of a dGPU. Now there is a single chip that offers both CPU and GPU to the user. Let's take this a step further. AMD offers CPUs with a TPM (Trusted Platform Module) in the CPU for trusted certs and encryption. Now we have CPU, GPU, and TPM in a single chip. The TPM may not sound impressive, but if you know how it was implemented, it becomes very interesting. The TPM (referred to as fTPM in the UEFI BIOS) is actually a smaller ARM RISC CPU with TrustZone technology, and the implementation is called the Platform Security Processor (PSP for short). So we're talking about a separate RISC CPU inside a CISC CPU! In addition to having a CPU, GPU, and RISC CPU, you also have an I/O die for basic input/output such as SATA and USB connectivity, plus a slew of sensors!

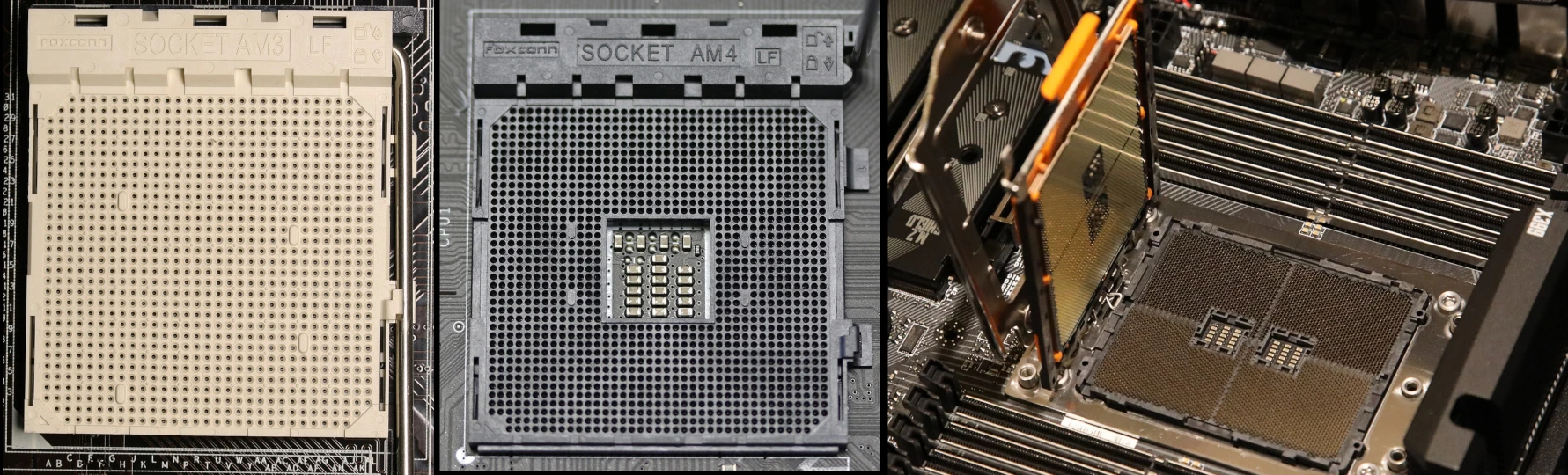

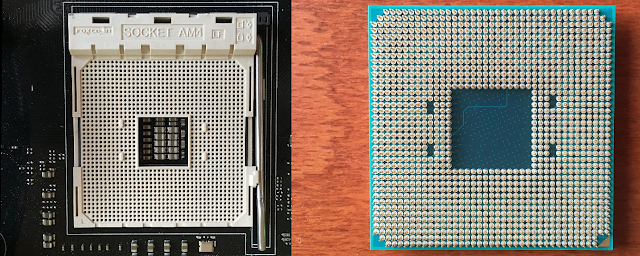

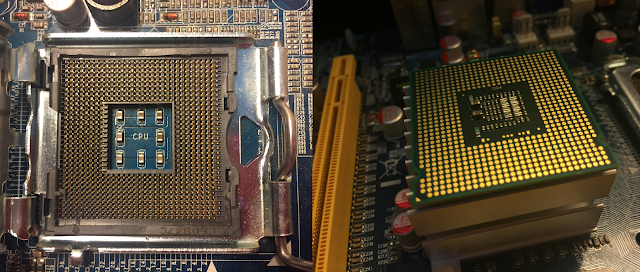

¶ Sockets and Pads

¶ PGA, LGA, and BGA

These are all different methods of connecting a CPU into a full system.

You have the older PGA (Pin-Grid Array), which has pins on the bottom of the CPU that fit into a socket with pin holes.

You also have the newer LGA (Land-Grid Array), which has pads/contacts on the bottom of the CPU and requires spring-like pins in the socket to touch the individual pads.

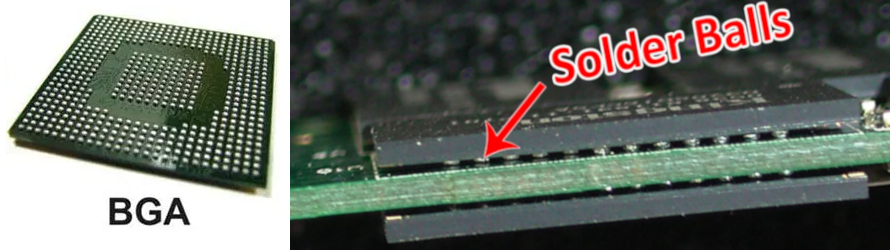

Then you have BGA (Ball-Grid Array), which is used in smaller and mobile electronics. It is effectively a method of soldering the CPU directly to the board by placing small balls of solder on the pads of the CPU and melting them onto matching pads on the board.

¶ Sockets

Don't be fooled into thinking CPU and socket choice is as simple as choosing between 3 grid arrays. As CPUs have advanced, the number of pins, pads, etc. has increased. If that isn't enough, feature changes have also resulted in entire socket changes to support newer hardware and to lock out unsupported hardware. Pin counts also aren't easy to tell apart by sight alone. You need to pay very close attention to the CPU you wish to buy and the corresponding socket required.
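One way to avoid a mismatch is to look up the socket by name rather than trusting your eyes. The sketch below uses a tiny hand-entered table with three example CPUs. The table and the `fits` helper are illustrative only, so always confirm against the manufacturer's spec sheet. Also note that a matching socket is necessary but not sufficient, since the motherboard's chipset and BIOS must support the CPU too.

```python
# Small hand-entered lookup table for illustration; not an exhaustive list.
CPU_SOCKET = {
    "Core i7-9700K": "LGA1151",
    "Core i7-10700": "LGA1200",
    "Ryzen 5 3600": "AM4",
}

def fits(cpu, board_socket):
    """Return True if the CPU's socket matches the motherboard's socket."""
    if cpu not in CPU_SOCKET:
        raise ValueError(f"unknown CPU {cpu!r}; look up its socket first")
    return CPU_SOCKET[cpu] == board_socket

print(fits("Core i7-10700", "LGA1200"))  # True
print(fits("Core i7-9700K", "LGA1200"))  # False
print(fits("Ryzen 5 3600", "AM4"))       # True
```

LGA1151 and LGA1200 differ by only a few dozen contacts, which is exactly the kind of difference you will not spot by looking at the chip.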